A political scientist named Andrew Hacker had an opinion piece in this past Sunday’s New York Times headlined “Is Algebra Necessary?” It begins

A typical American school day finds some six million high school students and two million college freshmen struggling with algebra. In both high school and college, all too many students are expected to fail. Why do we subject American students to this ordeal? I’ve found myself moving toward the strong view that we shouldn’t.

I guess no one who knows me will be too shocked to hear that I disagree.

Before going any further, let me stipulate something. I am not claiming that our math curriculum is perfect as it is. I suspect that certain changes, particularly an increased emphasis on mathematical intuition and data literacy at the expense of more abstract topics, might be a good idea. In fact, if Hacker had taken aim at calculus instead of algebra, I might be largely with him. Many college-bound students who get sent off on the death march towards calculus would be better off truly mastering more basic ideas instead. But algebra’s the wrong target for his ire.

Here’s the simple, obvious point. If you don’t teach algebra in high school, you are closing huge numbers of doors into careers in the STEM fields (that’s science, technology, engineering, and mathematics). Hacker disputes this with an anecdote about Toyota partnering with a community college to teach its employees “machine tool mathematics,” offered as evidence that it’s OK if high schools don’t teach these skills. Count me unconvinced.

STEM covers a bunch of different things, but let’s focus on just the E part. If you haven’t learned algebra (at least) in high school, you will not be an engineer. Period. (I urge Hacker to walk over to the pre-engineering program at his university and ask the folks there.) If you take algebra out of the standard high school curriculum, you will not train American engineers. Needless to say, high school students in the rest of the world will not unilaterally disarm and stop learning algebra because we do. Our teenagers will simply not be able to compete.

The same goes for a bunch of jobs in the S and T parts of STEM, and of course all of the M part. For instance, any job that involves understanding how a computer program works (not just software engineering but pretty much any job even peripherally related to the field) requires understanding the ideas of variables and functions, otherwise known as algebra.

Hacker says

It would be far better to reduce, not expand, the mathematics we ask young people to imbibe. (That said, I do not advocate vocational tracks for students considered, almost always unfairly, as less studious.)

That parenthetical comment at the end is extremely ironic. The result of the changes he proposes would be tracking on a massive scale, in which only the small minority of students whose parents have the wherewithal to put them into special programs or private schools would be eligible for many of the best jobs.

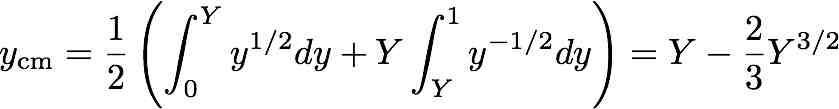

Hacker suggests that we should be teaching courses in things like “citizen statistics,” which “familiarize students with the kinds of numbers that describe and delineate our personal and public lives.” Such a course, he writes,

could, for example, teach students how the Consumer Price Index is computed, what is included and how each item in the index is weighted — and include discussion about which items should be included and what weights they should be given.

I’m 100% for this. But here’s the thing. Understanding even the idea of a weighted average involves (basic) algebra.
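To see why, here is what a weighted average actually is: multiply each value by its weight, add up the products, and divide by the sum of the weights. That is a formula in variables, which is to say algebra. The short Python sketch below does exactly that. The category names, price changes, and weights are made up for illustration and are not real CPI data; the actual index is computed with a far more elaborate methodology.

```python
def weighted_average(values, weights):
    """Return (sum of weight * value) / (sum of weights)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w * x for x, w in zip(values, weights)) / total_weight


# Hypothetical yearly price changes (in percent) for three made-up categories,
# with made-up weights for the share of a typical budget each one represents.
price_changes = {"housing": 2.0, "food": 4.0, "gasoline": -2.0}
weights = {"housing": 0.5, "food": 0.25, "gasoline": 0.25}

categories = list(price_changes)
overall = weighted_average(
    [price_changes[c] for c in categories],
    [weights[c] for c in categories],
)
print(overall)  # 1.5
```

The plain, unweighted mean of those three changes would be about 1.33%; the weights are what make housing count for more. Arguing about which items belong in the basket and how heavily to weight them, as Hacker proposes, is arguing about the weights in this formula.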

By all means, let’s teach algebra and related subjects in ways that relate to important topics. Let’s emphasize why the tools in these courses are important, even if that means de-emphasizing some of the more formal and abstract aspects of the subject. To steal one of Hacker’s examples, I’m OK if not all students become proficient at factoring polynomials. If we swap that out in favor of improving skills at reading and interpreting graphs, for instance, that’d be fine. But the main ideas of algebra — particularly the idea that you can figure things out about variables in general, rather than having to work out each case one at a time, and the idea of a function — are essential for all kinds of basic quantitative reasoning.

A couple of small final notes.

In his concluding paragraph Hacker says

Yes, young people should learn to read and write and do long division, whether they want to or not.

Actually, long division would be pretty high up on my list of things that could be jettisoned. We don’t teach people to do “long square roots” (yes, there is an algorithm for computing square roots by hand). Long division is only marginally more useful.
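For the curious, here is a minimal Python sketch of that digit-by-digit square root method. It assumes a non-negative whole-number input and truncates rather than rounds; the function name `long_sqrt` is just a label I picked, and this is one common way to write the method, not the only one.

```python
def long_sqrt(n: int, decimals: int) -> str:
    """Square root of a non-negative integer n by the digit-by-digit ("long")
    method, giving `decimals` digits after the decimal point, truncated."""
    if n < 0 or decimals < 0:
        raise ValueError("n and decimals must be non-negative")
    # Group the digits of n in pairs, counting from the decimal point.
    s = str(n)
    if len(s) % 2:
        s = "0" + s
    pairs = [int(s[i:i + 2]) for i in range(0, len(s), 2)]
    int_digits = len(pairs)       # one root digit per pair before the point
    pairs += [0] * decimals       # each "00" pair after the point adds a digit

    root = 0       # the digits of the root found so far, read as one integer
    remainder = 0
    for pair in pairs:
        # "Bring down" the next pair of digits.
        remainder = remainder * 100 + pair
        # Find the largest digit d with (20 * root + d) * d <= remainder:
        # double the root so far, append d, and multiply by d.
        d = 0
        while d < 9 and (20 * root + d + 1) * (d + 1) <= remainder:
            d += 1
        remainder -= (20 * root + d) * d
        root = root * 10 + d

    digits = str(root).rjust(int_digits + decimals, "0")
    whole, frac = digits[:int_digits], digits[int_digits:]
    whole = str(int(whole))  # drop any leading zero
    return f"{whole}.{frac}" if decimals else whole


print(long_sqrt(2, 5))    # 1.41421
print(long_sqrt(144, 2))  # 12.00
```

Notice how closely it mirrors long division: bring down the next chunk, find the largest digit that fits, subtract, repeat.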

Then there’s medicine. Hacker notes

Medical schools like Harvard and Johns Hopkins demand calculus of all their applicants, even if it doesn’t figure in the clinical curriculum, let alone in subsequent practice. Mathematics is used as a hoop, a badge, a totem to impress outsiders and elevate a profession’s status.

It’s true that calculus isn’t really necessary for doctors (and neither, truth be told, is most of the physics they’re supposed to learn). I think the reason that stuff is in the pre-med curriculum is partly a status thing, as he suggests, but also a general test of intelligence and of the ability to learn difficult things. To be honest, I’d be worried about being treated by a doctor who didn’t have the ability and perseverance to learn basic calculus, even though they don’t actually need to use calculus to treat me. But if you replaced calculus with something equally challenging, I’d be OK with that.