My brother Andy pointed me to this discussion on Tamino’s Open Mind blog of Bayesian vs. frequentist statistical methods. It’s focused on a nice, clear-cut statistics problem from a textbook by David MacKay, which can be viewed in either a frequentist or Bayesian way:

We are trying to reduce the incidence of an unpleasant disease called microsoftus. Two vaccinations, A and B, are tested on a group of volunteers. Vaccination B is a control treatment, a placebo treatment with no active ingredients. Of the 40 subjects, 30 are randomly assigned to have treatment A and the other 10 are given the control treatment B. We observe the subjects for one year after their vaccinations. Of the 30 in group A, one contracts microsoftus. Of the 10 in group B, three contract microsoftus. Is treatment A better than treatment B?

Tamino reproduces MacKay’s analysis and then proceeds to criticize it in strong terms. Tamino’s summary:

Let $p_A$ be the probability of getting "microsoftus" with treatment A, while $p_B$ is the probability with treatment B. He adopts a uniform prior, that all possible values of $p_A$ and $p_B$ are equally likely (a standard choice and a good one). "Possible" means between 0 and 1, as all probabilities must be.

He then uses the observed data to compute posterior probability distributions for $p_A$ and $p_B$. This makes it possible to compute the probability that $p_A < p_B$ (i.e., that you’re less likely to get the disease with treatment A than with B). He concludes that the probability is 0.990, so there’s a 99% chance that treatment A is superior to treatment B (the placebo).

Tamino has a number of objections to this analysis, which I think I agree with, although I’d express things a bit differently. To me, the problem with the above analysis is precisely the part that Tamino says is “a standard choice and a good one”: the choice of prior.

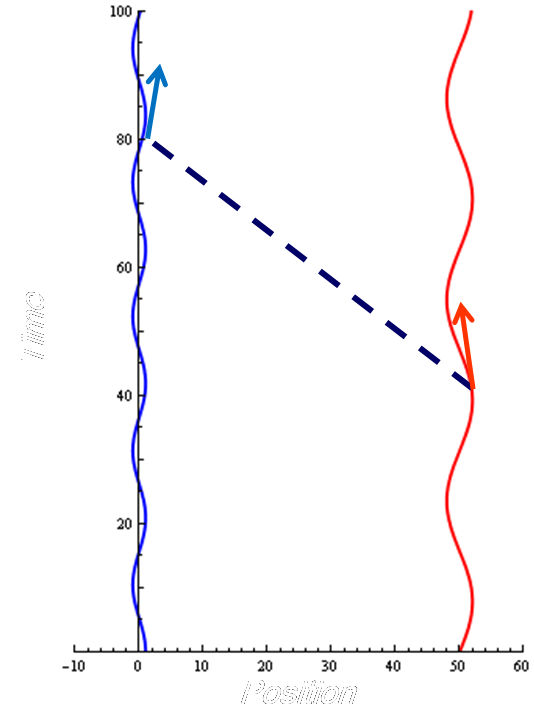

MacKay’s choice of prior expresses the idea that, before looking at the data, we thought that all possible pairs of probabilities ($p_A$, $p_B$) were equally likely. That prior is very unlikely to be an accurate reflection of our actual prior state of belief regarding the drug. Before you looked at the data, you probably thought there was a non-negligible chance that the drug had no significant effect at all, that is, that the two probabilities were exactly (or almost exactly) equal. So in fact your prior probability was surely not a constant function on the ($p_A$, $p_B$) plane: it had a big ridge running down the line $p_A = p_B$. An analysis that assumes a prior without such a ridge is an analysis that assumes from the beginning that the drug has a significant effect with overwhelming probability. So the fact that he concludes the drug has an effect with high probability is not at all surprising: it was encoded in his prior from the beginning!

The nicest way to analyze a situation like this from a Bayesian point of view is to compare two different models: one where the drug has no effect and one where it has some effect. MacKay analyzes the second one. Tamino goes on to analyze both cases and compare them. He concludes that the probability of getting the observed data is 0.00096 under the null model (drug has no effect) and 0.00293 under the alternative model (drug has an effect).
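For readers who want to check those two numbers, here is a minimal sketch of one way to get them. It rests on assumptions of mine rather than anything stated in Tamino's post: the null model has a single shared infection probability with a uniform prior, the alternative has two independent probabilities with uniform priors, and the binomial coefficients are included in the likelihood. The helper `beta_integral` and the variable names are my own, chosen for illustration.

```python
from fractions import Fraction
from math import comb, factorial


def beta_integral(a: int, b: int) -> Fraction:
    """Exact integral of p**a * (1 - p)**b over [0, 1], for non-negative integers."""
    return Fraction(factorial(a) * factorial(b), factorial(a + b + 1))


# Data from the problem: (number who got sick, group size).
sick_a, n_a = 1, 30
sick_b, n_b = 3, 10

# Binomial coefficients: the number of ways to choose which subjects get sick.
ways = comb(n_a, sick_a) * comb(n_b, sick_b)

# Null model (assumed): one shared probability p, uniform prior on [0, 1].
p_data_null = ways * beta_integral(sick_a + sick_b,
                                   (n_a - sick_a) + (n_b - sick_b))

# Alternative model (assumed): independent p_A and p_B, each uniform on [0, 1].
p_data_alt = (ways
              * beta_integral(sick_a, n_a - sick_a)
              * beta_integral(sick_b, n_b - sick_b))

evidence_ratio = p_data_alt / p_data_null

print(f"P(data | null)        = {float(p_data_null):.5f}")
print(f"P(data | alternative) = {float(p_data_alt):.5f}")
print(f"evidence ratio        = {float(evidence_ratio):.2f}")
```

Under these assumptions the two probabilities come out to about 0.00096 and 0.00293, matching the figures quoted above. Using exact fractions avoids any worry about floating-point error in the factorials.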

How do you interpret these results? The ratio of these two probabilities is about 3. This ratio is sometimes called the Bayesian evidence ratio, and it tells you how to modify your prior probability for the two models. To be specific,

Posterior probability ratio = Prior probability ratio x evidence ratio.

For instance, suppose that before looking at the data you thought that there was a 1 in 10 chance that the drug would have an effect. Then the prior probability ratio was (1/10) / (9/10), or 1/9. After you look at the data, you “update” your prior probability ratio to get a posterior probability ratio of 1/9 x 3, or 1/3. So after looking at the data, you now think there’s a 1/4 chance that the drug has an effect and a 3/4 chance that it doesn’t.

Of course, if you had a different prior probability, then you’d have a different posterior probability. The data can’t tell you what to believe; it can just tell you how to update your previous beliefs.
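The update rule is simple enough to sketch in a few lines. This is only an illustration: the function name `update_probability` is mine, and the evidence ratio is rounded to 3 as in the worked example above.

```python
def update_probability(prior_prob: float, evidence_ratio: float) -> float:
    """Return the posterior probability that the drug has an effect.

    prior_prob is the prior probability of the "has an effect" model;
    evidence_ratio is P(data | effect) / P(data | no effect).
    """
    prior_odds = prior_prob / (1.0 - prior_prob)
    posterior_odds = prior_odds * evidence_ratio
    # Convert odds back into a probability.
    return posterior_odds / (1.0 + posterior_odds)


# The same data move different priors to different posteriors.
for prior in (0.1, 0.5, 0.9):
    posterior = update_probability(prior, 3.0)
    print(f"prior {prior:.2f} -> posterior {posterior:.3f}")
```

A prior of 0.1 gives a posterior of 0.25, as in the example above, while a skeptic and an optimist starting elsewhere end up in different places even though both multiply their odds by the same factor.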

As Tamino says,

Perhaps the best we can say is that the data enhance the likelihood that the treatment is effective, increasing the odds ratio by about a factor of 3. But, the odds ratio after this increase depends on the odds ratio before the increase, which is exactly the prior we don't really have much information on!

People often make statements like this as if they’re pointing out a flaw in the Bayesian analysis, but this isn’t a bug in the Bayesian analysis; it’s a feature! You shouldn’t expect the data to tell you the posterior probabilities in a way that’s independent of the prior probabilities. That’s too much to ask. Your final state of belief will be determined by both the data and your prior belief, and that’s the way it should be.

Incidentally, my research group’s most recent paper has to do with a problem very much like this situation: we’re considering whether a particular data set favors a simple model, with no free parameters, or a more complicated one. We compute Bayesian evidence ratios just like this, in order to tell you how you should update your probabilities for the two hypotheses as a result of the data. But we can’t tell you which theory to believe — just how much your belief in one should go up or down as a result of the data.