Andrew Gould posted a strange little paper on the arXiv. Title and abstract:

The Most Precise Extra-Galactic Black-Hole Mass Measurement

I use archival data to measure the mass of the central black hole in NGC 4526, M = (4.70 ± 0.14) × 10^8 Msun. This 3% error bar is the most precise for an extra-galactic black hole and is close to the precision obtained for Sgr A* in the Milky Way. The factor 7 improvement over the previous measurement is entirely due to correction of a mathematical error, an error that I suggest may be common among astronomers.

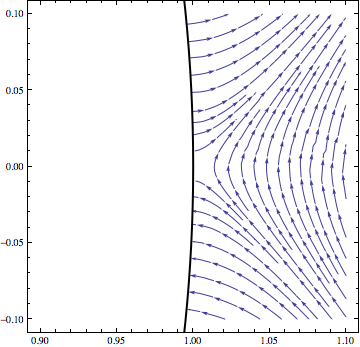

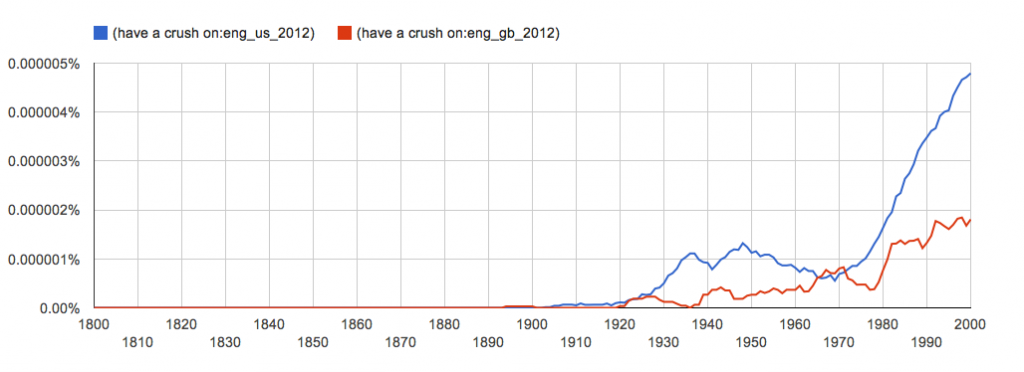

The story goes like this. A paper was published in Nature giving a measurement of the mass of the black hole in question. (Full article access from Nature is paywalled. Here’s the free arXiv version, which appears to be substantively identical.) The key figure:

According to Gould, “The contours of Figure 2 of Davis et al. (2013) reflect χ²/dof.” That last bit is “chi-squared per degree of freedom,” also known as “reduced chi-squared.” If that’s true, then the authors have definitely made a mistake. The recipe described in the figure caption for turning chi-squared contours into error estimates is based on (non-reduced) chi-squared, not chi-squared per degree of freedom.
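To see how much damage this can do, here’s a toy sketch in Python. It is not the Davis et al. analysis: the data, the error bar `sigma`, and the function names are all made up for illustration. It fits a single constant to 50 points and asks how far the parameter has to move before the statistic rises by 1. For ordinary chi-squared that distance is the usual 1-sigma error. Apply the same recipe to reduced chi-squared and the error bar is inflated by a factor of sqrt(dof).

```python
import math

def chi2(mu, data, sigma):
    """Ordinary (non-reduced) chi-squared for a constant model mu."""
    return sum(((x - mu) / sigma) ** 2 for x in data)

def half_width(data, sigma, rise, reduced=False):
    """Distance from the best fit at which the statistic rises by `rise`.

    With reduced=True the statistic is chi-squared divided by the number
    of degrees of freedom, which is the mistake under discussion.
    """
    n = len(data)
    dof = n - 1                      # one fitted parameter
    best = sum(data) / n             # the mean minimizes chi-squared here
    norm = dof if reduced else 1

    def stat(mu):
        return chi2(mu, data, sigma) / norm

    target = stat(best) + rise
    lo, hi = best, best + 100 * sigma    # bracket the crossing point
    for _ in range(200):                 # plain bisection
        mid = 0.5 * (lo + hi)
        if stat(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) - best

# Made-up data: 50 points scattered around 10, each with error bar 0.3.
sigma = 0.3
data = [10.0 + 0.1 * ((7 * i) % 11 - 5) for i in range(50)]

correct = half_width(data, sigma, rise=1.0)               # Delta chi2 = 1
wrong = half_width(data, sigma, rise=1.0, reduced=True)   # Delta (chi2/dof) = 1

print("error bar from chi-squared:        ", correct)
print("error bar from reduced chi-squared:", wrong)
print("ratio:", wrong / correct, " sqrt(dof):", math.sqrt(len(data) - 1))
```

I chose 50 points so that sqrt(dof) is a round number. Nothing here says how many degrees of freedom the real fit had.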

The Davis et al. paper doesn’t clearly state that Figure 2 shows reduced chi-squared, and Gould doesn’t say how he knows that it does, but comments in Peter Coles’s blog, which is where I first heard about this paper, seem to present a convincing case that Gould’s interpretation is right. See in particular this comment from Daniel Mortlock, which says in part

Since posting my comment above I have exchanged e-mails with Davis and Gould. Both confirm that the contours in Figure 2 of Davis et al. are in fact chi^2/red or chi^2/dof, so the caption is wrong and Gould’s re-evaluation of the uncertainty in M_BH is correct . . .

. . . at least formally. The reason for the caveat is that, according to Davis, there are strong correlations between the data-points, so the constraints implied by ignoring them (as both Davis and Gould have done so far) are clearly too tight.

So it appears that Gould correctly identified an error in the original analysis, but both papers cheerfully ignore another error that could easily be extremely large! Ignoring “strong correlations” when calculating chi-squareds is not OK.
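Here’s a rough sketch of how large that effect can be, again with invented numbers. I assume 50 measurements of a single quantity, each with the same error bar and the same correlation coefficient `rho` with every other measurement. That is a hypothetical setup chosen for simplicity; I have no idea what the actual correlation structure of the Davis et al. data looks like. The code compares the error on the mean you’d quote if you ignored the correlations with the error you get from the full covariance matrix.

```python
import math

def covariance(n, sigma, rho):
    """n x n covariance matrix: variance sigma^2, equal correlation rho."""
    return [[sigma ** 2 * (1.0 if i == j else rho) for j in range(n)]
            for i in range(n)]

def sigma_of_mean(cov):
    """Standard deviation of the plain average, using the full covariance.

    Var(mean) = (1/n^2) * sum over all i, j of cov[i][j].
    """
    n = len(cov)
    return math.sqrt(sum(sum(row) for row in cov)) / n

n, sigma, rho = 50, 0.3, 0.5    # all three values are made up

naive = sigma / math.sqrt(n)                       # pretends rho = 0
honest = sigma_of_mean(covariance(n, sigma, rho))  # keeps the correlations
ratio = honest / naive                             # sqrt(1 + (n - 1) * rho)

print("error ignoring correlations:", naive)
print("error with correlations:    ", honest)
print("underestimate factor:       ", ratio)
```

In this toy model the naive error bar is too small by a factor of sqrt(1 + (n - 1) rho), which grows with the number of data points. Setting `rho = 0` recovers the naive answer.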

So this is quite a messy story, but the conclusion seems to be that you shouldn’t have much confidence in either error estimate.

I find myself wondering about the backstory behind Gould’s paper. When you find an error like this in someone else’s work, the normal first step is to email the authors directly and give them a chance to sort it out. I wonder if Gould did this, and if so what happened next. As we all know, people are reluctant to admit mistakes, but the fact that reduced chi-squared is the wrong thing to use here is easily verified. (I guarantee you that at least some of the authors have a copy of the venerable book Numerical Recipes on their shelves, which has a very clear treatment of this.) Moreover, correcting the error in question makes the authors’ results much stronger and more interesting (by reducing the size of the error bar), which should make it easier to persuade them.

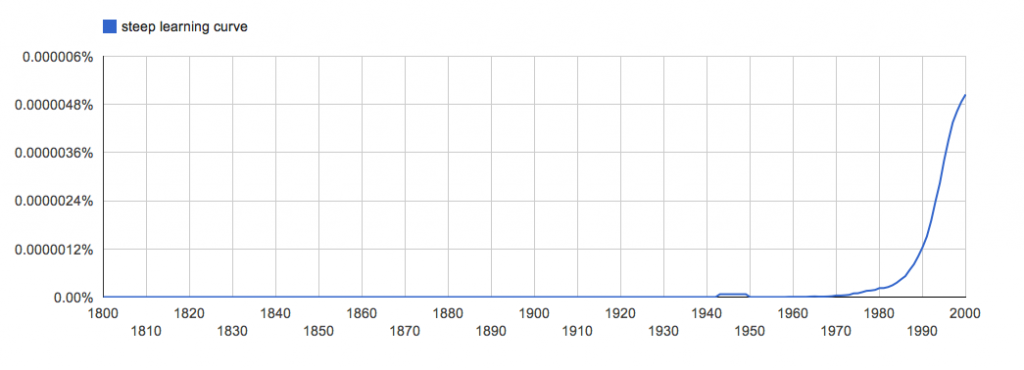

The other strange thing in Gould’s paper is the last phrase of the abstract: “an error that I suggest may be common among astronomers.” His evidence for this is that he saw one other paper recently (which he doesn’t cite) that contained the same error. Coming from someone who’s clearly concerned about correctly drawing inferences from data, this seems like weak evidence.

One last thing. Peter Coles’s post drawing attention to this paper casts it as a Bayesian-frequentist thing:

The “mathematical error” quoted in the abstract involves using chi-squared-per-degree-of-freedom instead of chi-squared instead of the full likelihood function instead of the proper, Bayesian, posterior probability. The best way to avoid such confusion is to do things properly in the first place. That way you can also fold in errors on the distance to the black hole, etc etc…

At first, I have to admit that I wasn’t sure whether he was joking here or not. Sometimes I have trouble recognizing that understated, dry British sense of humor. (I guess that’s why I never fully appreciated Benny Hill.)

Anyway, this isn’t about the whole Bayesian-frequentist business. The various errors involved here (using reduced chi-squared and ignoring correlations) are incorrect from either point of view. Suppose someone put a batch of cookies in a gas oven, forgot about them, and came back two hours later to find them burnt. You wouldn’t say, “See? That’s why electric ovens are much better than gas ovens.”

Peter’s riposte:

The problem is that chi-squared is used by too many people who don’t know (or care) what it is they’re actually trying to do. Set it up as a Bayesian exercise and you won’t be tempted to use an inappropriate recipe.

Or set it up as a frequentist exercise and you’ll do it right too, as long as you think clearly about what you’re doing. This is an argument against doing things without thinking about them carefully, nothing more.

As long as I’ve come this far, in case anyone’s wondering, here’s my position, to the extent I have one, on the Bayesian-frequentist business:

- The frequentist interpretation of the meaning of probabilities is incoherent.

- It’s perfectly OK to use frequentist statistical techniques, as long as you know what you’re doing and what the results of such an analysis mean. In particular, a frequentist analysis never tells you anything about the probability that a theory is true, just about the probability that, if a theory is true, the data that you see would occur.

- As a corollary of the last point, even if you’ve used frequentist techniques to analyze your data, when you want to draw conclusions about the probabilities that various theories are true, you have to use Bayesian inference. There’s simply no other coherent way to do this sort of reasoning. Even frequentists do this, although they may not admit it, even to themselves.

- Given that you have to use Bayesian inference anyway, it’s better to be open about it.
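The second and third points can be made concrete with a few lines of Python. This is a minimal sketch with invented numbers: two rival theories, H0 and H1, and some data that would occur 5% of the time if H0 were true and 50% of the time if H1 were true. Those two likelihoods are all a frequentist analysis gives you. To get the probability that H0 is true, you need Bayes’s theorem and a prior, and the answer depends heavily on the prior.

```python
def posterior_h0(prior_h0, like_h0, like_h1):
    """P(H0 | data) from Bayes's theorem, assuming H0 and H1 are the only options."""
    prior_h1 = 1.0 - prior_h0
    evidence = prior_h0 * like_h0 + prior_h1 * like_h1
    return prior_h0 * like_h0 / evidence

like_h0 = 0.05   # P(data | H0), made up
like_h1 = 0.50   # P(data | H1), made up

# Same data, same likelihoods, two different priors on H0.
even_odds = posterior_h0(0.50, like_h0, like_h1)
strong_prior = posterior_h0(0.99, like_h0, like_h1)

print("P(H0 | data) with a 50% prior on H0:", even_odds)
print("P(H0 | data) with a 99% prior on H0:", strong_prior)
```

With even prior odds, H0 ends up unlikely. With a strong prior in its favor, H0 remains the better bet even though the data were improbable under it. The 5% figure alone settles neither case.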