Something happened today that I’ll bet is unprecedented: Two unrelated articles on the front page of the New York Times were about physicists. One has important policy and national security implications, so I’m going to talk about the other one, which was written by Dennis Overbye. In the print edition that Luddites like me have, it’s headlined “Why Are We Here? Let’s Just Thank Our Lucky Muons We Are”. The online article is headlined “A New Clue to Explain Existence.”

The article is about a new result out of Fermilab, showing roughly that matter and antimatter behave differently from each other. (Here are slides from a talk on the results, and a technical article about them.) I don’t have the expertise for a technical analysis of the result, so instead I’ll say a bit about why it might be interesting.

First, though, a word on antimatter. In teaching and in casual conversations, I’ve noticed that many people seem to think that antimatter is a science-fiction term like dilithium or the flux capacitor. Not so. Antimatter exists. We make it in the lab all the time. It even exists in nature, although in “ordinary” environments like the Earth it exists only in very small quantities (some natural radioactive isotopes emit positrons, which are the antimatter version of electrons).

In fact, in almost every way antiparticles behave just like ordinary particles, just with the opposite charges: an antiproton behaves like a proton, except that the proton is positively charged and the antiproton is negatively charged; and similarly for all the other particles you can think of. (Some uncharged particles, like the photon, are their own antiparticles — that is, they’re simultaneously matter and antimatter. Some, like the neutron, have antiparticles that are distinct.)

This has led to a longstanding puzzle: If the laws of nature treat matter and antimatter the same way, how did the Universe come to contain huge amounts of matter and virtually no antimatter? Why isn’t there an anti-Earth, made of anti-atoms consisting of antiprotons, antineutrons, and antielectrons (also known as positrons)?

One response to this question is to decide you don’t care! In general, the laws of physics tell us how a system evolves from some given initial state; they don’t tell us what that initial state had to be. Maybe the Universe was just born this way, with matter and not antimatter.

Most physicists reject that response as unsatisfying. Here’s one reason. We have strong evidence that the early Universe was extremely hot. At these ultrahigh temperatures, particle collisions should have been producing tons of pairs of particles and antiparticles, while other collisions were causing those particle-antiparticle pairs to annihilate each other. In this hot early state, there were, on average, about a billion times as many protons as there are today, and an almost exactly equal number of antiprotons. Eventually, as the Universe cooled, collisions stopped producing these particle-antiparticle pairs, and pretty much all of the matter and antimatter annihilated each other.

This means that, if you want to believe that the Universe was born with an excess of matter over antimatter, you have to believe that it was born with a very slight excess: for every billion antiprotons, there were a billion and one protons. That way, when the Universe cooled, and the Great Annihilation occurred, there were enough protons (and electrons and neutrons) left over to explain what we see today. That’s a possible scenario, but it doesn’t sound very likely that the Universe would have been constructed in that way.
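To get a feel for how slight that excess is, here is a minimal sketch of the bookkeeping in Python. The counts are just the round "billion and one" figures from the paragraph above, not measured values, and the variable names are mine.

```python
# Illustrative round numbers from the text, not measured values.
antiprotons = 1_000_000_000
protons = antiprotons + 1  # "a billion and one" protons per billion antiprotons

# In the Great Annihilation, each antiproton takes one proton with it.
survivors = protons - antiprotons

# Fraction of all the original protons and antiprotons that survives.
surviving_fraction = survivors / (protons + antiprotons)

print(f"Protons left over per billion antiprotons: {survivors}")
print(f"Surviving fraction of the original particles: {surviving_fraction:.1e}")
```

In this toy accounting, about one particle in two billion makes it through, which is the sense in which the initial excess would have to be "very slight."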

(By the way, this is what physicists like to call a “naturalness” or a “fine-tuning” argument. It’s a dangerous way to argue. Endless, pointless disputes can be had for the asking, simply by claiming that someone else’s pet theory is “unnatural” or “fine-tuned.” In this case, pretty much everyone agrees about the unnaturalness, but maybe pretty much everyone is wrong!)

It’s much more satisfying to imagine that there is a cause for the prevalence of matter over antimatter. Such a cause would have to involve a difference in the laws of physics between matter and antimatter. There are such differences: in some ways antiparticles do behave very slightly differently from their particle counterparts. (That’s why I threw in weaselly language such as “In almost every way” earlier.)

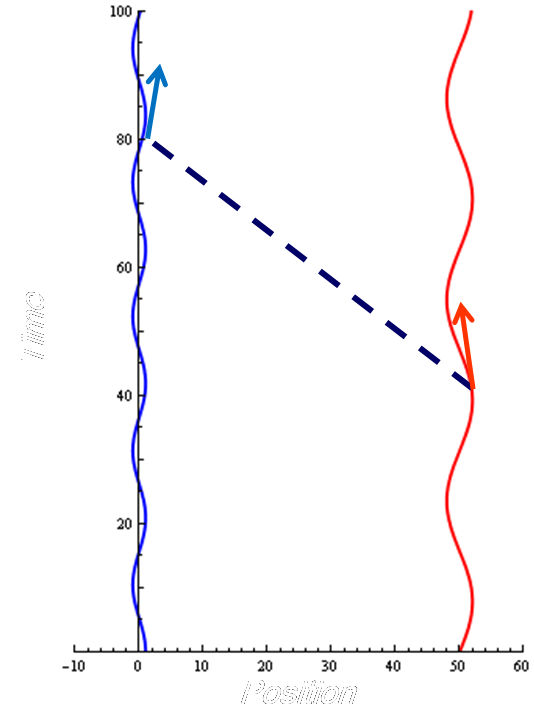

To explain the prevalence of matter over antimatter, it turns out that you need (among other things) a specific kind of difference between matter and antimatter known as CP violation. CP violation was first observed in the 1960s. (Incidentally, I had a summer research job when I was in college working for one of the discoverers.) Very roughly speaking, certain types of particles can oscillate back and forth between matter and antimatter versions. That sounds strange, but all by itself it wouldn’t be CP violation, as long as there was a symmetry between both halves of the oscillation (matter –> antimatter and antimatter –> matter). But there’s not. The particles spend slightly more time in one form than in the other. That’s CP violation.

So what’s the new result? Basically, it’s a new example of CP violation, which doesn’t fit into the framework established in the “standard model” of particle physics. Certain particles (a type of B meson), when they decay, are slightly more likely to decay into negatively charged muons (“matter”) than into positively charged muons (“antimatter”). In the standard model you’d expect these particles to be equally happy to produce matter and antimatter, at least to much higher precision than the observed asymmetry.

This is important to particle physicists, but why is it important to Dennis Overbye and the New York Times? The main reason is that the type of asymmetry seen here is a necessary step in solving the puzzle of the matter-antimatter asymmetry in the Universe — that is, why any protons and electrons were left over after the Great Annihilation. But it’s worth emphasizing that this discovery does not actually solve that problem. As far as I can tell, this experiment doesn’t lead to a model of the early Universe in which the matter-antimatter asymmetry is explained. It just means there’s a glimmer of hope that, at some point in the future, such a model will be established.

To particle physicists, the result is important even if it doesn’t solve the matter asymmetry problem, simply because it appears to be “new physics.” Since the 1970s, there has been a “standard model” of particle physics that has successfully explained the result of every experiment in the field. While it’s great to have such a successful theory, it’s hard to make further progress without some unexplained results to try to explain. There are lots of reasons to believe that the standard model is not the complete word on the subject of particle physics, but without experimental guidance it’s been hard to know how to proceed in finding a better theory.

One final note: As with all new results, it’s entirely possible that this one will prove to be wrong! If the Fermilab physicists have done their analysis right, the result is significant at about 3.2 standard deviations, which means there’s less than a 0.14% probability that a chance fluctuation alone would produce an effect at least this large. That’s a pretty good result, but we’ll have to wait and see if it’s confirmed by other experiments. Presumably the LHC at CERN can make a similar measurement.
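If you want to check the conversion from standard deviations to a probability yourself, here is a short Python sketch. It assumes the fluctuations are Gaussian and that the 0.14% figure counts fluctuations in either direction (a two-sided probability); both are my assumptions about how the number was obtained, and the function names are mine.

```python
import math

def two_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian variable lands at least n_sigma
    standard deviations from its mean, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2))

def one_sided_p_value(n_sigma: float) -> float:
    """Same, but counting fluctuations in one direction only."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))

p_two = two_sided_p_value(3.2)
p_one = one_sided_p_value(3.2)

print(f"Two-sided probability at 3.2 sigma: {p_two:.3%}")
print(f"One-sided probability at 3.2 sigma: {p_one:.3%}")
```

Under these assumptions the two-sided probability comes out just under 0.14%, and the one-sided probability is half that.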