When people talk about US politics, they often focus on the various candidates’ “electability”. In particular, they talk about basing their support for a given candidate in the primary on how likely that candidate is to win the election.

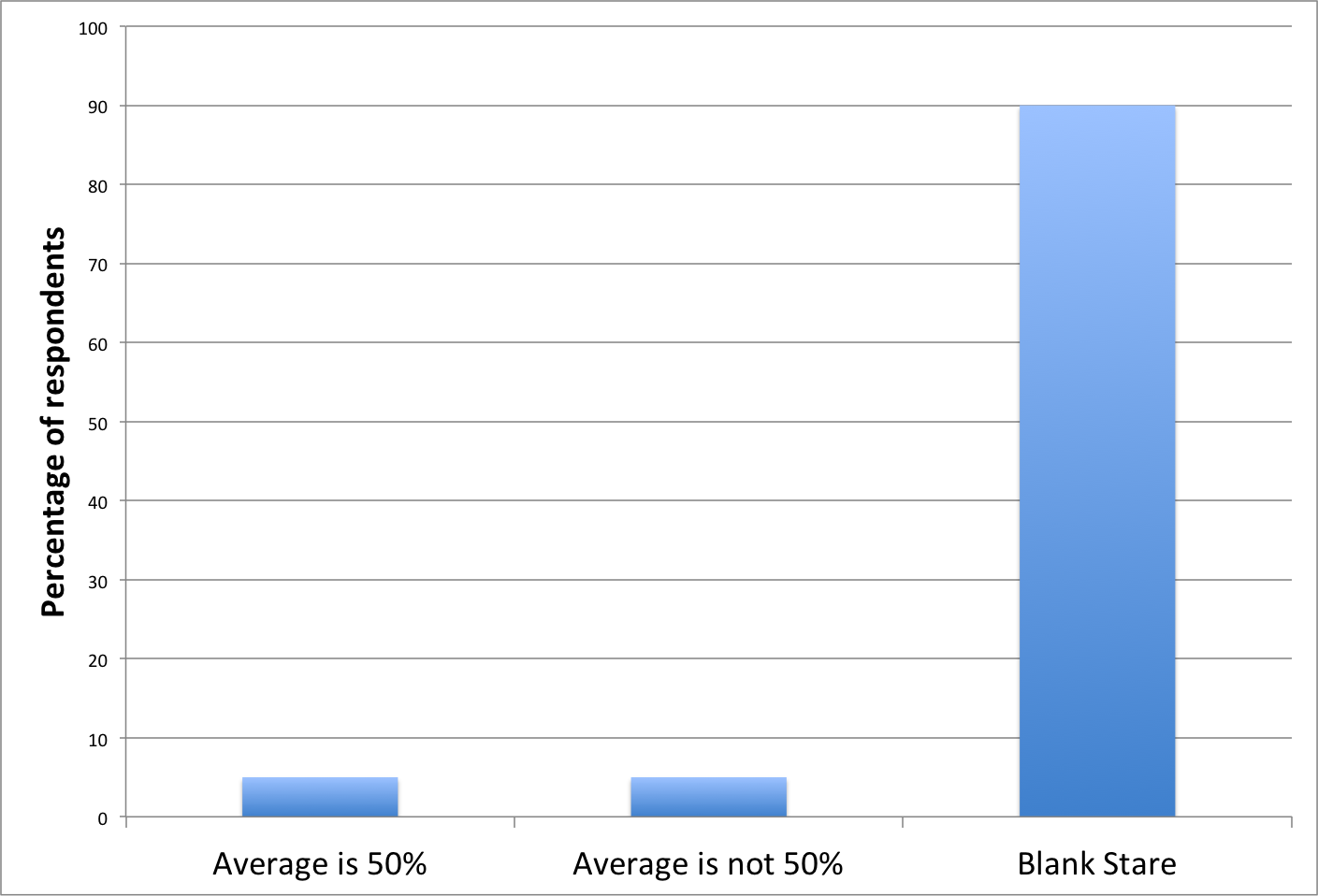

This is a perfectly reasonable thing to think about, of course. If your primary goal is, say, to get a Democrat into the White House, then it makes sense to pick the Democrat who’s most likely to get there, even if that’s not your favorite candidate. The only problem is that, I suspect, people are often quite bad at guessing who’s the most electable candidate.

Eight years ago, I observed (1,2,3) that there is one source of data that might help with this, namely the political futures markets. These are sites where bettors can place bets on the outcomes of the elections. The odds available on the market at any given time show the probabilities that the bettors are willing to assign to the various outcomes. For instance, as of yesterday, at the PredictIt market, you could place a bet of $88 that would pay off $100 if Hillary Clinton wins the Democratic nomination. This means that the market “thinks” Clinton has an 88% chance of getting the nomination.

To assess a candidate’s electability, you want the conditional probability that the candidate wins the election, if he or she wins the nomination. The futures markets don’t tell you those probabilities directly, but you can get them from the information they do give.

Here’s a fundamental law of probability:

P(X becomes President) = P(X is nominated) * P(X becomes President, given that X is nominated).

The last term, the conditional probability, is the candidate’s “electability”. PredictIt lets you bet both on whether a candidate will win the nomination and on whether a candidate will win the general election. The odds for those bets give you the other two probabilities in that equation, so you can get the electability simply by dividing the probability of winning the election by the probability of winning the nomination.
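Rearranging the equation for the conditional probability gives the electability directly, using the same terms:

$$
\text{electability}(X) = P(X \text{ becomes President} \mid X \text{ is nominated}) = \frac{P(X \text{ becomes President})}{P(X \text{ is nominated})}
$$

For Clinton, for example, that works out to 57.5 / 88.5 ≈ 0.65.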

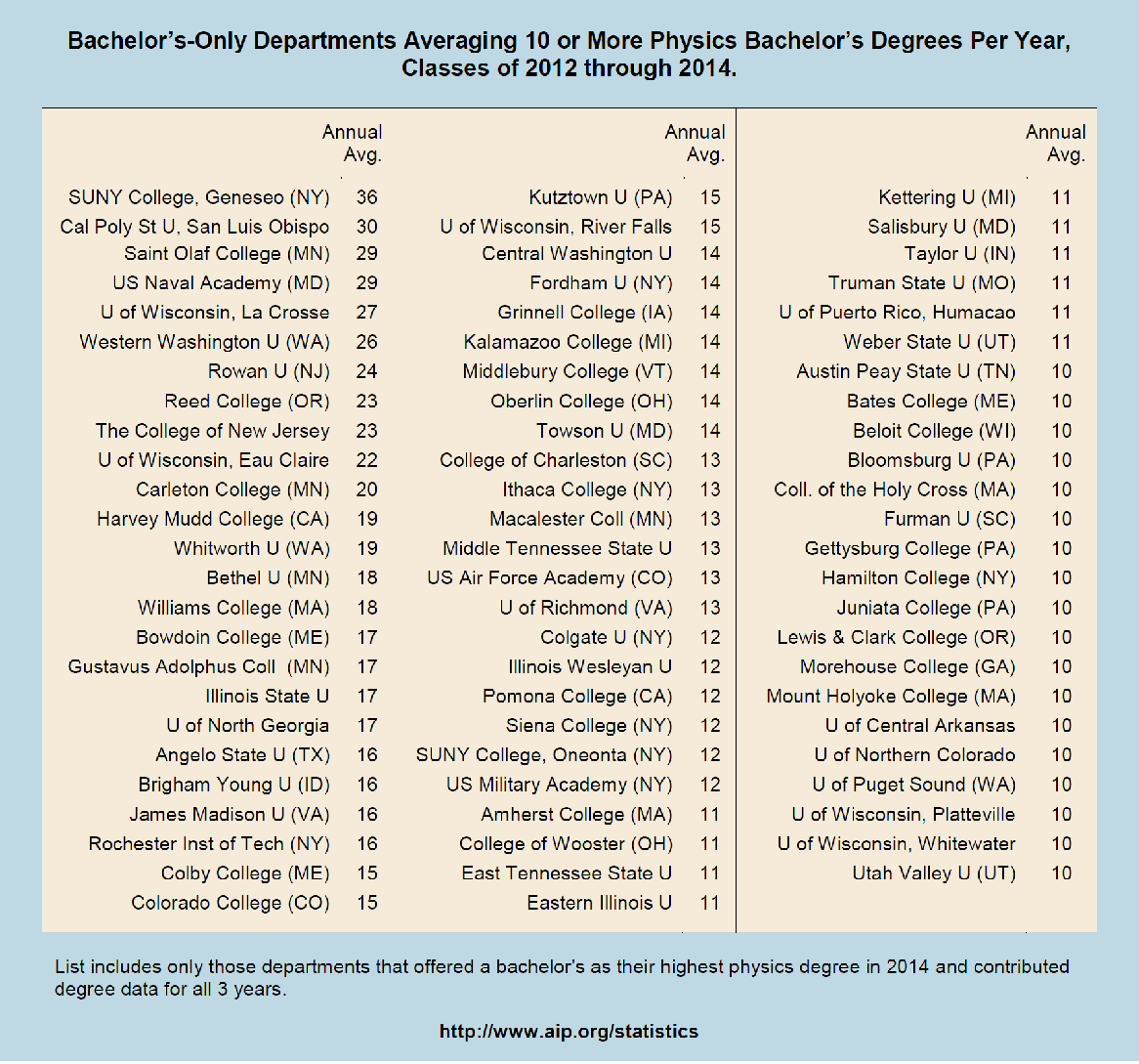

So, as of Saturday, December 5, here’s what the PredictIt investors think about the various candidates:

| Party | Candidate | Nomination Probability (%) | Election Probability (%) | Electability (%) |
|---|---|---|---|---|
| Democrat | Clinton | 88.5 | 57.5 | 65 |
| Democrat | Sanders | 12.5 | 6.5 | 52 |
| Republican | Bush | 9.5 | 4.5 | 47 |
| Republican | Cruz | 25.5 | 10.5 | 41 |
| Republican | Rubio | 39.5 | 19.5 | 49 |
| Republican | Trump | 25.5 | 15.5 | 61 |

(In case you’re wondering, the 0.5’s are there because PredictIt has a one-cent gap, i.e. one percentage point, between the buy and sell prices on all these contracts. I went with the average of the two. PredictIt also lists other candidates, with lower probabilities, but I didn’t include them in this table.)
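To double-check the Electability column, here is a small Python sketch that divides the election probability by the nomination probability for each row. The numbers are transcribed from the table; the names `MARKETS` and `electability` are just illustrative. Since the input columns are themselves midpoints rounded to the half-point, the last digit of each electability figure is approximate.

```python
# Midpoint probabilities (percent) copied from the December 5 PredictIt table:
# candidate -> (P(nominated), P(becomes President)).
MARKETS = {
    "Clinton": (88.5, 57.5),
    "Sanders": (12.5, 6.5),
    "Bush": (9.5, 4.5),
    "Cruz": (25.5, 10.5),
    "Rubio": (39.5, 19.5),
    "Trump": (25.5, 15.5),
}


def electability(p_nominated, p_president):
    """P(President | nominated) = P(President) / P(nominated)."""
    if p_nominated <= 0:
        raise ValueError("nomination probability must be positive")
    return p_president / p_nominated


for name, (p_nom, p_pres) in MARKETS.items():
    # Print electability as a percentage with one decimal place.
    print(f"{name:8} {100 * electability(p_nom, p_pres):5.1f}%")
```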

I’ve heard lots of people on the left say that they hope Donald Trump wins the nomination, because he’s unelectable, that is, because the Democrat would surely beat him in the general election. I don’t know if that’s true or not, but it’s sure not what this market is saying: at 61%, Trump has the highest electability of any Republican in the table.

Of course, the market could be wrong. If you think it is, then you have a chance to make some money. In particular, if you do think that Trump is unelectable, you can go place a bet against him to win the general election.

To be more specific, suppose that you are confident the market has overestimated Trump’s electability. That means that they’re either overestimating his odds of winning the general election, or they’re underestimating his odds of getting the nomination. If you think you know which is wrong, then you can bet accordingly. If you’re not sure which of those two is wrong, you can place a pair of bets: one that he’ll lose the general election, and one that he’ll win the nomination. Choose the amounts to hedge your bet, so that you break even if he doesn’t get the nomination. This amounts to a direct bet on Trump’s electability. If you’re right that his electability is less than 61%, then this bet will be at favorable odds.
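Here is a minimal sketch of the hedge arithmetic, under simplifying assumptions that are mine rather than the market’s: each contract pays $1, prices are the table’s midpoints (Trump’s nomination at $0.255, the general election at $0.155, so a “No” on the general election costs $0.845), PredictIt’s fees and the buy/sell gap are ignored, fractional shares are allowed, and Trump cannot become President without the nomination. The function names are hypothetical.

```python
from fractions import Fraction


def hedged_electability_bet(nom_price, elect_price, no_shares):
    """Pair a 'No' on the general election with a 'Yes' on the nomination.

    Prices are per $1 contract. Simplifications: a candidate who is not
    nominated cannot win the election; fees and spreads are ignored.
    """
    # Each 'No, X won't win the election' share costs (1 - elect_price)
    # and pays $1 whenever X does not become President.
    # Size the 'Yes, X wins the nomination' position so the not-nominated
    # outcome breaks even: no_shares * elect_price == yes_shares * nom_price.
    yes_shares = no_shares * elect_price / nom_price
    cost = yes_shares * nom_price + no_shares * (1 - elect_price)
    return {
        "yes_shares": yes_shares,
        "cost": cost,
        "not_nominated": no_shares - cost,                 # only the 'No' pays
        "nominated_loses": yes_shares + no_shares - cost,  # both pay
        "nominated_wins": yes_shares - cost,               # only the 'Yes' pays
    }


def expected_profit_if_nominated(bet, electability):
    """Expected profit, given nomination, if X's true electability is `electability`."""
    return (1 - electability) * bet["nominated_loses"] + electability * bet["nominated_wins"]


bet = hedged_electability_bet(Fraction("0.255"), Fraction("0.155"), 100)
for outcome in ("cost", "not_nominated", "nominated_loses", "nominated_wins"):
    print(outcome, round(float(bet[outcome]), 2))
market_electability = Fraction("0.155") / Fraction("0.255")
print(float(expected_profit_if_nominated(bet, market_electability)))  # 0.0 at the market's own ~61%
print(float(expected_profit_if_nominated(bet, Fraction(1, 2))))      # positive if he's really a coin flip
```

In this sketch, $100 buys 100 “No” shares on the general election plus about 60.8 “Yes” shares on the nomination. You get your $100 back if Trump isn’t nominated, gain about $60.78 if he is nominated and loses, and lose about $39.22 if he is nominated and wins. Given the nomination, the expected profit works out to $100 × (market electability − true electability), so the pair of bets is favorable exactly when his true electability is below the market’s roughly 61%.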

So to all my lefty friends who say they hope Trump wins the nomination, so that Clinton (or Sanders) will stroll into the White House, I say put your money where your mouth is.