Some 19th-century skeptic is supposed to have said that all churches should be required to bear the inscription “Important If True” above their doors. (Google seems to credit Alexander William Kinglake, whoever he was.) That’s pretty much what I think about the big announcement yesterday of the measurements of cosmic microwave background polarization by BICEP2.

This result has gotten a lot of news coverage, which is fair enough: if it holds up, it’s a very big deal. But personally, I’m still laying quite a bit of stress on the “if true” part of “important if true.” I don’t mean this as any sort of criticism of the people behind the experiment: they’ve accomplished an amazing feat. But this is an incredibly difficult observation, and at this point I can’t manage to regard the results as more than an extremely exciting suggestion of something that might turn out to be true.

Incidentally, I have to point out the most striking quotation I saw in any of the news reports. My old friend Max Tegmark is quoted in the New York Times as saying

“I think that if this stays true, it will go down as one of the greatest discoveries in the history of science.”

A big thumbs-up to Max for the first clause: lots of people who should know better are leaving that out (unless nefarious editors are to blame). But the main clause of the sentence is frankly ludicrous. It’s natural (and even endearing) that Max is excited about this result, but this isn’t natural selection, or quantum mechanics, or conservation of energy, or the existence of atoms, to name just a few of the “greatest discoveries in the history of science.”

I’ll say a bit about why this result is important, then a bit about why I’m still skeptical. Finally, since the only way to think coherently about any of this stuff is with Bayesian reasoning, I’ll say something about that.

Important

I’m not going to try to explain the science in detail right now. (Other people have.) But briefly, it goes like this. For about 30 years now, cosmologists have suspected that the Universe went through a brief period known as “inflation” at very early times, perhaps as early as 10⁻³⁵ seconds after the Big Bang. During inflation, the Universe expanded extremely (almost inconceivably) rapidly. According to the theory, many of the most important properties of the Universe as it exists today originated during inflation.

Quite a bit of indirect evidence supporting the idea of inflation has accumulated over the years. It’s the best theory anyone has come up with for the early Universe. But we’re still far from certain that inflation actually happened.

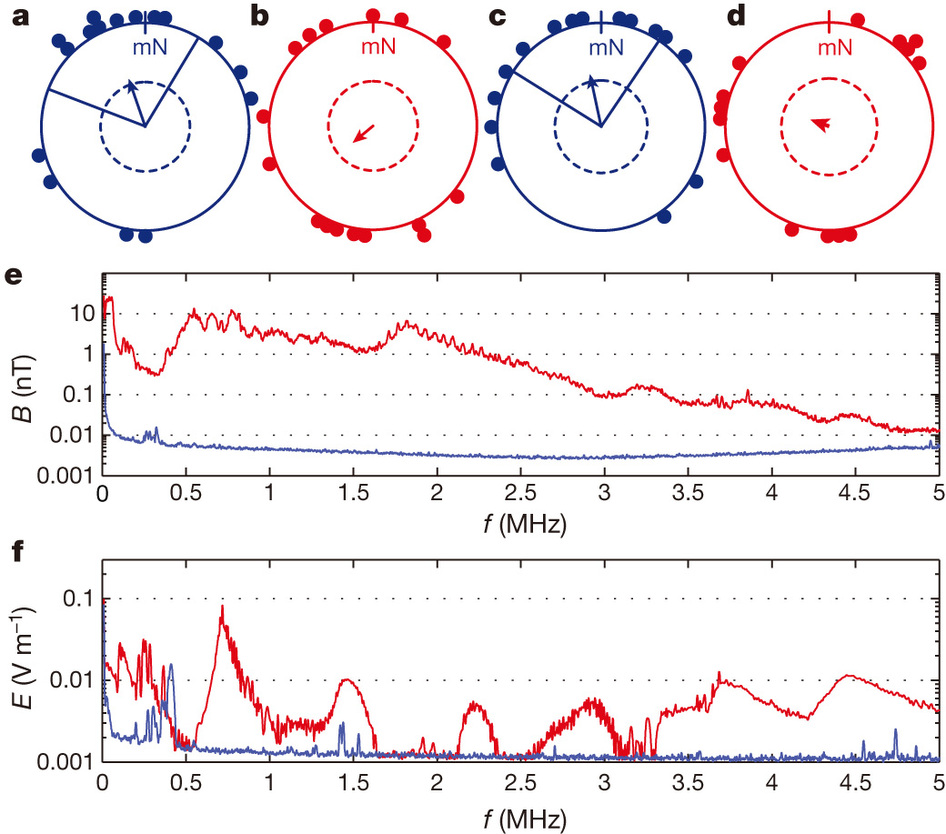

For quite a while now, people have known about a potentially testable prediction of inflation. During the inflationary period, there should have been gravitational waves (ripples in spacetime) flying around. Those gravitational waves should leave an imprint that can still be seen much later, specifically in observations of the cosmic microwave background radiation (the oldest light in the Universe). To be specific, the polarization of this radiation (i.e., the orientation of the electromagnetic waves we observe) should vary across the sky in a way that has a particular sort of geometric pattern. In the jargon of the field, we should expect to see B-mode microwave background polarization on large angular scales.

That’s what BICEP2 appears to have observed.

If this is correct, it’s a much more direct confirmation of inflation than anything we’ve seen before. It’s very hard to think of any alternative scenario that would produce the same pattern as inflation, so if this pattern is really seen, then it’s very strong evidence in favor of inflation. (The standard metaphor here is “smoking gun.”)

If True

(Let me repeat that I don’t mean the following as any sort of criticism of the BICEP2 team. I don’t think they’ve done anything wrong; I just think that these experiments are hard! It’s pretty much inevitable that the first detection of something like this would leave room for doubt. It’s very possible that these doubts will turn out to be unfounded.)

One big worry in this field is foreground contamination. We look at the microwave background through a haze of nearby stuff, mostly stuff in our own Galaxy. An essential part of this business is to distinguish the primordial radiation from these local contaminants. One of the best ways to do this is to observe the radiation at multiple frequencies. The microwave background signal has a known spectrum — that is, the relative amplitudes at different frequencies are fixed — which is different from the spectra of various contaminants.
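To make the multi-frequency idea concrete, here is a toy sketch, not anything resembling a real analysis pipeline. It assumes a single pixel, no noise, one foreground component, and units in which the microwave background has the same amplitude at both frequencies. The function name `separate_components`, the scaling factor `dust_ratio`, and all the numbers are hypothetical.

```python
def separate_components(map_low, map_high, dust_ratio):
    """Solve the two-frequency system for one pixel.

    Assumed toy model (hypothetical, noise-free):
        map_low  = cmb + dust
        map_high = cmb + dust_ratio * dust
    where dust_ratio is the known foreground scaling between frequencies.
    """
    if dust_ratio == 1.0:
        # If the foreground had the same spectrum as the CMB,
        # no amount of frequency coverage could tell them apart.
        raise ValueError("identical spectra: components cannot be separated")
    dust = (map_high - map_low) / (dust_ratio - 1.0)
    cmb = map_low - dust
    return cmb, dust


# Made-up values, for illustration only.
true_cmb, true_dust, dust_ratio = 1.0, 0.5, 4.0
map_low = true_cmb + true_dust
map_high = true_cmb + dust_ratio * true_dust

cmb, dust = separate_components(map_low, map_high, dust_ratio)
print(cmb, dust)
```

With only one of the two maps, the same equations have one measurement and two unknowns, which is why single-frequency data cannot do this kind of separation on its own.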

The (main) data set used to derive the new results was taken at one frequency, which doesn’t allow for this sort of spectral discrimination. The authors of the paper do use additional data at other frequencies, but I’ll be much happier once those data get stronger.

I should say that the authors do give several lines of argument suggesting that foregrounds aren’t the main source of the signal they see, and at least some other people I respect don’t seem as worried about foregrounds as I am, so maybe I’m wrong to be worried about this. We will get more foreground information soon, e.g., from the Planck satellite, so time will tell.

There are other hints of odd things in the data, which may not mean anything. Matt Strassler lays out a couple. One more thing someone pointed out (can’t immediately track down who): the E-type polarization significantly exceeds predictions in precisely the region (l=50 or so) where the B signal is most significant. The E signal is larger / easier to measure than the B signal. Is this a hint of something wrong?

I’m actually more worried about the problem of “unknown unknowns.” The team has done an excellent job of testing for a wide variety of systematic errors and biases, but I worry that there’s something they (and we) haven’t thought of yet. That seems unfair: how can I ding them for something that nobody’s even thought of? But nonetheless I worry about it.

The solution to that last problem is for another experiment to confirm the results using different equipment and analysis techniques. That’ll happen eventually, so once again, time will tell.

(Digression: I always thought it odd that people mocked Donald Rumsfeld for talking about “unknown unknowns.” I think it was the smartest thing he ever said.)

What Bayes has to say

This section is probably mostly for Allen Downey, but if you’re not Allen, you’re welcome to read it anyway.

My campaign to rename “Bayesian reasoning” with the more accurate label “correct reasoning” hasn’t gotten off the ground for some reason, but the fact remains that Bayesian probabilities are the only coherent way to think about situations like this (and practically everything else!) where we don’t have enough information to be 100% sure.

This paper is definitely evidence in favor of inflation.

P1 = P(BICEP2 observes what it did | inflation happened)

is significantly greater than

P2 = P(BICEP2 observes what it did | inflation didn’t happen)

so your estimate of the probability that inflation happened should go up based on this new information.
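The mechanics of that update are just Bayes’ theorem for two hypotheses. The sketch below shows them with placeholder numbers that are not estimates of anything; the function name `posterior_probability` and every value passed to it are assumptions made purely for illustration.

```python
def posterior_probability(prior, p1, p2):
    """Posterior probability that inflation happened.

    prior: probability assigned to inflation before seeing the data
    p1:    P(data | inflation happened)
    p2:    P(data | inflation didn't happen)
    """
    # Total probability of the data under both hypotheses.
    evidence = prior * p1 + (1.0 - prior) * p2
    return prior * p1 / evidence


# Placeholder numbers only: same prior, increasingly lopsided P1/P2.
prior = 0.5
for p1, p2 in [(0.5, 0.5), (0.5, 0.1), (0.5, 0.01)]:
    print(p1 / p2, posterior_probability(prior, p1, p2))
```

When P1 equals P2 the data tell you nothing and the posterior equals the prior; the larger the ratio P1/P2, the further the posterior moves toward 1.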

The question is how much it should go up. I’m not going to try to be quantitative here, but I do think there are a couple of observations worth making.

First, all the stuff in the previous section goes into one’s assessment of P2. Without the possibility of foregrounds or undiagnosed systematic errors messing things up, P2 would be extremely tiny. Your assessment of how likely those problems are is what determines your value of P2 and hence the strength of the evidence.

But there’s more to it than just that. “Inflation” is not just a theory; it’s a family of theories. In particular, it’s possible for inflation to have happened at different energy scales (essentially, different times after the Big Bang), which leads to different predictions for the B-mode amplitude. The amplitude BICEP2 detected is very close to the upper limit on what would have been possible, based on previous information. In fact, in the simplest models, the amplitude BICEP2 sees is inconsistent with previous data; to make everything fit, you have to go to slightly more complicated models. (For the cognoscenti, I’m saying that you seem to need some running of the spectral index to make BICEP2’s amplitude consistent with TT observations.) That makes P1 effectively smaller, reducing the strength of the evidence for inflation.

What I’m saying here is that the tension between BICEP2 and other sources of information makes it more likely that there’s something wrong.

Formally, instead of talking about a single number P1, you should talk about

P1(r,…) = P(BICEP2 observes what it did | r, …).

Here r is the amplitude of the signal produced in inflation and “…” refers to the additional parameters introduced by the fact that you have to make the model more complicated.

Then the probability that shows up in a Bayesian evidence calculation is the integral of P1(r,…) times a prior probability on the parameters. The thing is that the values of r where P1(r,…) is large are precisely those that have low prior probability (because they’re disfavored by previous data). Also, the more complicated models (with those extra “…” parameters) are in my opinion less likely a priori than simple models of inflation.

So I claim that, when properly integrated over the priors, P1 isn’t as large as you might have thought, and so the evidence for inflation isn’t as strong as it might seem.

Of course, it’s hard to be quantitative about this. I could make up some numbers, but they’d just be illustrative, so I don’t think they’d add much to the argument.
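For readers who want to see the shape of the prior-integration argument anyway, here is a purely illustrative toy. It assumes a single parameter r, a Gaussian stand-in for P1(r) that peaks at a fairly large amplitude, and an exponential prior that favors small r. Every function, shape, and number here is hypothetical and is not derived from BICEP2 or any other data.

```python
import math


def likelihood(r):
    # Hypothetical stand-in for P1(r): a bump with peak value 1 at r = 0.2.
    return math.exp(-0.5 * ((r - 0.2) / 0.05) ** 2)


def prior_density(r):
    # Hypothetical prior on r >= 0 that favors small amplitudes:
    # an exponential distribution with mean 0.05.
    scale = 0.05
    return math.exp(-r / scale) / scale


def marginal_likelihood(r_max=1.0, steps=10000):
    """Midpoint-rule integral of likelihood(r) * prior_density(r) on [0, r_max]."""
    dr = r_max / steps
    total = 0.0
    for i in range(steps):
        r = (i + 0.5) * dr
        total += likelihood(r) * prior_density(r) * dr
    return total


peak = likelihood(0.2)            # best-case value of P1(r)
averaged = marginal_likelihood()  # P1 after averaging over the prior
print(peak, averaged)
```

Because the toy likelihood is large only where the toy prior is small, the prior-averaged value comes out much smaller than the peak value, which is the qualitative point of the argument. The size of the gap depends entirely on the made-up shapes above.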