The 2009 Goldwater Scholarships were announced today. I’m pleased to announce that two University of Richmond students were recognized: Matt Der was awarded a scholarship, and Anna Parker received an honorable mention. In both cases, the honor is extremely well-deserved. If you know these two students, please make sure to congratulate them.


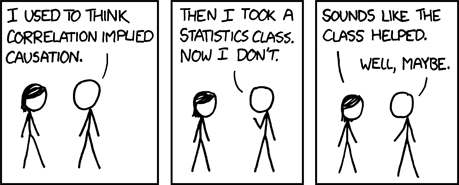

Correlation is not causation

The Economist should know better

I generally think of the Economist as having excellent science reporting, but I have to admit that I only read it sporadically these days, so maybe my information is out of date. This article on some recent experiments in the foundations of quantum mechanics is way, way below the standard I’d expect from them.

The article claims that

Now two groups of physicists, working independently, have demonstrated that nature is indeed real when unobserved.

Your skepticism level should spike whenever you read an article claiming that experimental results have confirmed some deep-sounding-yet-vague philosophical idea.

When the article gets down to details, it says a bunch of things that are just wrong:

In the 1990s a physicist called Lucien Hardy proposed a thought experiment that makes nonsense of the famous interaction between matter and antimatter—that when a particle meets its antiparticle, the pair always annihilate one another in a burst of energy. Dr Hardy's scheme left open the possibility that in some cases when their interaction is not observed a particle and an antiparticle could interact with one another and survive.

This makes no sense. It’s quite common for a particle and its antiparticle to interact without annihilating each other. There’s nothing paradoxical about that.

Later on, we have

The stunning result, though, was that in some places the number of photons was actually less than zero. Fewer than zero particles being present usually means that you have antiparticles instead. But there is no such thing as an antiphoton (photons are their own antiparticles, and are pure energy in any case), so that cannot apply here.

Where to begin? First, I don’t believe the statement that the researchers measured “less than zero” (or even “fewer than zero”) photons. Did they have a photomultiplier tube that clicked -17 times? The actual articles by the two experimental groups contain no claims of anything that could be described in this way.

Second, the idea that fewer than zero particles means antiparticles isn’t really true. There was a theory due to Dirac a long time ago in which positrons (the antiparticles of electrons) were regarded as “holes” that were sort of like negative numbers of electrons, but that theory doesn’t really fit into modern particle physics in any nice way as far as I know.

Finally, can we please ban the phrase “photons are pure energy”? There’s no meaningful sense in which that’s true.

Now I know that science journalists have a tough job, particularly when reporting on very abstract things such as quantum physics. I have no objection at all to their simplifying things to make them clearer. But the above statements aren’t simplifications; they’re just wrong.

These experimental results seem to be a confirmation of a “paradox” in which joint probabilities of two quantities don’t obey the rules that you’d expect classically. Like other similar paradoxes, it’s a nice example of the ways in which quantum mechanics is “spooky,” but I don’t see how the results say anything new about whether reality exists when you’re not looking (whatever that even means).

There’s a common problem in journalism about the foundations of quantum mechanics. As my friend John Baez said a long time ago,

Newspapers tend to report the results of these fundamentals-of-QM experiments as if they were shocking and inexplicable threats to known physics. It’s gotten to the point where whenever I read the introduction to one of these articles, I close my eyes and predict what the reported results will be, and I’m always right. The results are always consistent with simply taking quantum mechanics at face value.

The Economist article goes pretty far (if not all the way) down this route. It takes a clever and interesting experimental result and blows it up into something much spookier than it is. The result does follow precisely the pattern Baez describes: it’s exactly what standard quantum mechanics predicted all along.

Please note that I’m not criticizing the experimenters here: as far as I can tell, they’ve done a difficult and very interesting experiment and described their results accurately. I don’t see anything in their papers that would justify the silly interpretation placed on it by the Economist.

By the way, I got the Baez quote above from a web page by Matt McIrvin which quotes Baez. I can’t find this quote on Baez’s own web site, not that I looked all that hard. Matt has great things to say about the interpretation of quantum mechanics. If you’re at all interested in this subject, please read this page in addition to — or better yet instead of — the many silly things that have been written on the subject. John Baez has said a bunch of really smart things on the subject in various newsgroup posts over the years as well. He collected some of them here, but as far as I know he hasn’t written up anything more polished.

Who’s the audience?

My friend Walter makes the following very sensible point about my recent article on evolution and the second law of thermodynamics:

The paper about thermodynamics and evolution is neat. Though of course it will not convince any creationists as their argument was never truly science based anyway.

This is certainly true. I don’t harbor any hope that even a single creationist will read the article and see the error of his ways. So then who is this article written for, and what’s the point of writing it? There are actually a few answers.

1. The people who are most likely to read the article are people with an interest in the teaching of physics. It’ll appear in the American Journal of Physics, which is kind of a funny journal: it publishes some articles explicitly about physics education research (comparing different instructional techniques, etc.), but its main emphasis is articles about physics, written in a way that makes them of interest to physics educators. The main reason I wrote the article is that I think it raises some interesting physics points that educators may find useful. In particular, I like the fact that you can answer this interesting question with back-of-the-envelope estimates based on standard undergraduate statistical mechanics techniques. I wish I’d worked all this out before the last time I taught statistical mechanics: I would have liked to use it in my class. I expect I’ll get another chance. Anyway, I hope that other statistical mechanics instructors find it useful.

2. My article is a follow-up to an earlier article, which I really liked but which had an unfortunate error in it. I wanted to set the record straight.

3. Despite Walter’s correct assertion that I’m not going to win any creationist hearts and minds with this article, I do hope it contributes in a tiny way to the ongoing arguments on this subject. Walter’s quite right that fundamentally the creationists’ argument is non-scientific, but on the other hand creationists do frequently try to make scientific (or scientific-seeming) arguments in support of their beliefs. In particular, the claim that there is a conflict between evolution and the second law is a scientific claim, and the right way to refute it is scientifically.

When creationists make scientific claims, they like to use the traditional signs of scientific authority to bolster these claims. For instance, they prominently refer to the academic degrees and positions held by their advocates. Moreover, they use the peer-reviewed literature, and prominently note that they’re doing so. I’m not criticizing them for doing this: peer review (for all its flaws) is the main way that scientific quality is evaluated. But this does suggest that, when a claim is made in the creationism/evolution debates that has the form of a scientific argument but is scientifically incorrect, it’s worthwhile to have an authoritative, peer-reviewed refutation of it. When a creationist tries to use the authority of the peer-reviewed literature to make an incorrect point, a scientist can fight fire with fire.

Another tactic used by creationists (and other people who reject well-established science) is to claim that scientists are unwilling to even look at arguments that go against the standard orthodoxy. That’s another reason it’s worthwhile to examine the creationist argument and take it seriously enough to show why it’s wrong.

The intended audience is not the already-convinced creationist, of course, but the innocent bystander who may not know much about the subject and who hasn’t made up his mind. I don’t expect such a person to read the article (although if they’ve had enough undergraduate-level physics, it’d be great if they did); I want scientists and science teachers who are talking to such people to be able to say, “Yes, scientists have looked carefully at this argument, taken it seriously, and shown why it’s incorrect.”

Failed satellite launch

I asked my brother Andy what the consequences for climate science would be from the failed launch of the Orbiting Carbon Observatory satellite this week. He pointed me to this, which has a lot of good information, both on this satellite and on the difficulty of doing science with space-based instruments in general.

For those who don’t know about it, RealClimate.org is full of great stuff.

Does peer review work?

Recently, I’ve been spending a lot of time with the peer review process. I’ve been revising a couple of papers in response to comments from the referees, and at roughly the same time I’ve been acting as referee for a couple of articles.

Some journals send each article just to a single referee, while others use multiple referees per article. In the latter case the author and the editor can get some idea of how well the process works by how consistent the different referee reports are with each other. In particular, if one referee says that a paper has a fundamental flaw that absolutely must be fixed, while the other notices nothing wrong, it’s natural to wonder what’s going on.

In fact, by keeping track of how often this sort of thing occurs, you can estimate how good referees are at spotting problems and hence how well the peer review process works. Let’s consider just problems that are so severe as to prevent acceptance of the paper (ignoring minor corrections and suggestions, which are often a big part of referees’ reports). Suppose that the typical referee has a probability p of finding each such problem. If the journal sends the paper to two referees, then the following things might happen:

- Both referees miss the problem. This happens with probability $(1-p)^2$.

- One referee finds the problem and the other misses it. The probability for this is $2p(1-p)$.

- Both referees find the problem. The probability here is $p^2$.

A journal editor, or an author who’s written a bunch of papers, can easily estimate the ratio of the last two options: when one referee finds a problem, how often does the other referee find the same problem? From that ratio you can solve for p and know how well the referees are doing.
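For the curious, here’s a minimal Python sketch of that calculation. It assumes, as above, that each referee independently catches a given problem with the same probability p, so the ratio of "one found it" to "both found it" is 2p(1-p)/p², which simplifies to 2(1-p)/p and solves to p = 2/(r + 2). The function names and the tallies are made up for illustration; the simulation just checks that the formula recovers a known p.

```python
import random


def estimate_referee_skill(one_found: int, both_found: int) -> float:
    """Estimate p from how often one vs. both referees flag a problem.

    Assumes two independent referees, each catching a given problem with
    probability p. Then one_found / both_found ~ 2p(1-p) / p**2 = 2(1-p)/p,
    which solves to p = 2 / (r + 2).
    """
    if both_found <= 0:
        raise ValueError("need at least one problem flagged by both referees")
    r = one_found / both_found
    return 2.0 / (r + 2.0)


def prob_both_miss(p: float) -> float:
    """Probability that two independent referees both miss a given problem."""
    return (1.0 - p) ** 2


def simulate(p: float, trials: int, seed: int = 0) -> tuple[int, int]:
    """Monte Carlo check: count problems flagged by exactly one / both referees."""
    rng = random.Random(seed)
    one = both = 0
    for _ in range(trials):
        a = rng.random() < p  # referee A catches the problem
        b = rng.random() < p  # referee B catches it, independently of A
        if a and b:
            both += 1
        elif a or b:
            one += 1
    return one, both


if __name__ == "__main__":
    # Hypothetical tallies: equal numbers of "one found it" and "both found it".
    p = estimate_referee_skill(one_found=6, both_found=6)
    print(f"p = {p:.3f}, both miss = {prob_both_miss(p):.3f}")
    # Recover a known p (here 0.5) from simulated referee reports.
    one, both = simulate(p=0.5, trials=100_000)
    print(f"simulated estimate of p: {estimate_referee_skill(one, both):.3f}")
```

With equal counts the first line prints `p = 0.667, both miss = 0.111`. Note that the independence assumption matters: if some problems are just harder to spot than others, the two referees’ successes are correlated and this simple estimate will be off.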

In my experience, I’d say that it’s at least as common for just one referee to find a problem as it is for both referees to find it. That means that the typical referee has at best a 2/3 chance of finding any given problem. And that means that the probability of both referees missing a problem (if there is one) is at least $(1/3)^2$, or 1/9. I’ll leave it up to you to decide whether you think that’s a good or bad success rate.
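For the record, here’s the algebra behind the 2/3 and 1/9 figures, assuming two independent referees who each find a given problem with the same probability p. "At least as common for one referee to find it as for both" means the ratio of those two probabilities is at least 1:

```latex
\frac{2p(1-p)}{p^2} = \frac{2(1-p)}{p} \ge 1
\;\Longrightarrow\; 2 - 2p \ge p
\;\Longrightarrow\; p \le \tfrac{2}{3}
\;\Longrightarrow\; (1-p)^2 \ge \left(\tfrac{1}{3}\right)^2 = \tfrac{1}{9}.
```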

The main thing that made me think of this is that in one referee report I sent off recently, I pointed to what I think is a major, must-fix error, and I’m willing to bet the other referee won’t mention it. That’s not because the other referee is a slacker — I don’t know who it is, or even whether this particular journal uses multiple referees. It’s because the paper happens to have a problem in an area that I’m unusually picky about. It’s bad luck for the authors that they drew me as a referee for this paper. (But, assuming that I’m right to be picky about this issue — which naturally I think I am — it’s good for the community as a whole.)

This sort of calculation shows up in other places, by the way. I first heard of something like this when my friend Max Tegmark was finishing his Ph.D. dissertation. He got two of us to read the same chapter and find typos. By counting the number of typos we each found, and the number that both of us found, he worked out how many undiscovered typos there must be. I don’t remember the number, but it wasn’t small. On a less trivial level, I think that wildlife biologists use essentially this technique to assess how efficient their population counting methods are: If you know how many bears you found, and how many times you found the same bear twice, you can work out how many total bears there are.
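The textbook version of this idea in ecology is the Lincoln-Petersen capture-recapture estimator. I don’t know whether that’s exactly what Max did, so treat this Python sketch as an illustration of the general technique; the function names and the typo counts are hypothetical. The logic: if the two searches are independent and every item is equally findable, the fraction of B’s finds that A also found should match the fraction of all items that A found.

```python
def lincoln_petersen(found_by_a: int, found_by_b: int, found_by_both: int) -> float:
    """Capture-recapture estimate of the total number of items (typos, bears).

    Assumes the two searches are independent and every item is equally likely
    to be found. Then found_by_both / found_by_b estimates A's hit rate
    found_by_a / N, so N ~ found_by_a * found_by_b / found_by_both.
    """
    if found_by_both <= 0:
        raise ValueError("no overlap between the two searches: estimate undefined")
    return found_by_a * found_by_b / found_by_both


def estimated_undiscovered(found_by_a: int, found_by_b: int, found_by_both: int) -> float:
    """Estimated number of items that neither search turned up."""
    total = lincoln_petersen(found_by_a, found_by_b, found_by_both)
    seen = found_by_a + found_by_b - found_by_both  # distinct items found by either
    return total - seen


if __name__ == "__main__":
    # Hypothetical numbers: reader A finds 30 typos, reader B finds 20,
    # and 10 of those were found by both.
    print(lincoln_petersen(30, 20, 10))        # 60.0
    print(estimated_undiscovered(30, 20, 10))  # 20.0
```

If some typos (or bears) are harder to find than others, the two searches tend to overlap on the easy ones, and this estimate comes out too low.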

More on the colliding satellites

The National Weather Service says

PUBLIC INFORMATION STATEMENT NATIONAL WEATHER SERVICE JACKSON KY 1145 PM EST FRI FEB 13 2009 ...POSSIBLE SATELLITE DEBRIS FALLING ACROSS THE REGION... THE NATIONAL WEATHER SERVICE IN JACKSON HAS RECEIVED CALLS THIS EVENING FROM THE PUBLIC CONCERNING POSSIBLE EXPLOSIONS AND...OR EARTHQUAKES ACROSS THE AREA. THE FEDERAL AVIATION ADMINISTRATION HAS REPORTED TO LOCAL LAW ENFORCEMENT THAT THESE EVENTS ARE BEING CAUSED BY FALLING SATELLITE DEBRIS. THESE PIECES OF DEBRIS HAVE BEEN CAUSING SONIC BOOMS...RESULTING IN THE VIBRATIONS BEING FELT BY SOME RESIDENTS...AS WELL AS FLASHES OF LIGHT ACROSS THE SKY. THE CLOUD OF DEBRIS IS LIKELY THE RESULT OF THE RECENT IN ORBIT COLLISION OF TWO SATELLITES ON TUESDAY...FEBRUARY 10TH WHEN KOSMOS 2251 CRASHED INTO IRIDIUM 33.

That’s pretty amazing.

Update: Well, it would be if it were true. Turns out it’s just meteors.

As you were.

Boom!

I know that space debris is a real and worsening problem, but I have to admit that my first reaction to hearing that two satellites had just collided was “Cool!”

Britain envy

There’s nothing in US journalism anything like Ben Goldacre’s Bad Science columns for the Guardian. Check them out. The point of the column is to tear into bad science and especially bad science reporting. He writes with humor (or is it humour?) and attitude. He’s not afraid to get a bit technical (although with great clarity, I think) when the situation calls for it.

In a way, he has nothing to complain about: he lives in a country with both the Guardian and the Economist, which have much better science reporting than anything we’ve got here.

Science and stimulus

The Senate is considering removing a bunch of science funding from the stimulus package. I don’t pretend to know much about the economics, and I freely admit that as someone who gets funded by NSF I have an interest in this, but still I’d like to argue that removing these funds is a bad idea.

You want shovel-ready? It’s hard to get much more shovel-ready than an NSF grant. A lot of the money in a typical grant goes to fund jobs for several people, including students and postdocs, who will start work pretty much right away. Some goes to equipment, and that money, too, will be spent pretty quickly.

Some of the money goes to overhead for the host institution. When that’s a public university, that’s a great outcome too, as these universities are going to be squeezed by state budget cuts and an increase in students taking shelter from the job market. In the case where the host institution is a rich private university, I’ll grant that sending overhead there isn’t necessarily the highest priority, although it’s far from the worst thing you can do with the money. Anyway, public universities that really need the money far outweigh rich private schools that don’t.

In addition to being shovel-ready, of course you want the money to go to worthwhile things. I’ll just point out that a strong case can be made that funding basic science is an investment that pays back in future economic growth.

Finally, let me point out that a lot of great grant proposals submitted to the funding agencies are denied, not for lack of merit but for lack of funds. The first link above says that 1/4 of NSF proposals are funded. I’ve been on several grant review panels in the past few years, and it’s always been more like 1/6. Believe me, there are a lot of extremely worthy ideas among the unfunded 5/6 of the proposals.

Now’s a good time to write and call your senator to let them know what you think about science funding. People I’ve talked to in Washington say that calls like this really do make a difference. If you’re in Virginia, your senators are Mark Warner (202-224-2023) and Jim Webb (202-224-4024). If you’re not in Virginia, you can look up how to contact your senators.

Update: The compromise bill passed by the Senate includes most of the original science funding.